
9th January 2023

Can Artificial Intelligence replace fund managers?

Artificial intelligence (AI) has the potential to aid human fund managers in their decision-making process and improve their efficiency, but it is unlikely that it will fully replace them. Fund management involves a variety of tasks, such as portfolio construction, risk management, and client communication, which require human judgement, intuition, and emotional intelligence. AI can assist with tasks such as analysing market data, identifying trends, and generating investment ideas, but it cannot replicate the full range of skills and expertise that human fund managers bring to the table. It is more likely that AI will be used as a tool to augment the work of human fund managers, rather than replacing them entirely.

OK, I confess, I didn’t write the last paragraph. I typed my opening question, with a little apprehension, into the latest Silicon Valley phenomenon, ChatGPT, a chatbot launched in November last year (GPT stands for Generative Pre-trained Transformer). It was built by OpenAI, an artificial intelligence research laboratory set up and funded in 2015 by some of Silicon Valley’s most successful tech entrepreneurs, including Elon Musk, who has since left the organisation. It was established, in their words, to develop AI to benefit humanity as a whole, and it recognises many of the dangers the technology might present. Given its ethos, OpenAI was initially a non-profit organisation, although that was diluted somewhat in 2019 when Microsoft invested an additional one billion dollars, albeit with a profit cap.

If you haven’t heard of ChatGPT already, you soon will. Within one week of launch it had one million active users, making it the fastest roll-out in Silicon Valley history. The Atlantic described it as “The breakthrough of the year” for 2022 and Elon Musk himself, having seen the platform in action, tweeted “This is scary good, we are not far from dangerously strong AI”.

The chatbot, although only a beta version, is astonishing to use and its command of language is remarkable. Try asking it to write a poem on chess in the style of Donald Trump, or test it with more mundane requests: its suggested recipe for eggs Benedict was just fine.

It is not without fault, though: the current version has numerous technical limitations. For example, the model’s formal training was completed in 2021, so many of its responses are not based on up-to-date information. In addition, many of its conclusions are simply wrong, which is particularly problematic because its language is so convincing that most users are, reportedly, still likely to believe them.

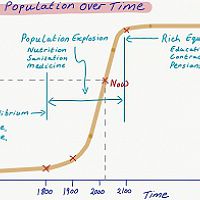

Although many of these technical problems will be ironed out with later releases, the ChatGPT phenomenon is also raising concerns at a grander level. High school students, for example, are already submitting essays written on the platform and so educationalists are worried, understandably, about the impact this might have on writing and critical thinking skills. Imagine an economics student, for example, being asked to write an essay outlining the main causes of inflation (see below) – it wouldn’t be awarded a Grade A by any means, but it breaks the back of the challenge and offers a good structure that the student could in-fill with a few examples.

On Complex Issues

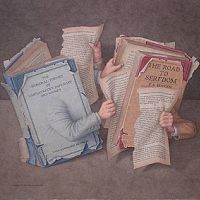

The inflation essay highlights a more fundamental challenge for this technology, however. Although it is coherent and serves, to a point, the immediate needs of the economics student, it doesn’t question or go beyond the bounds of its existing body of knowledge. This will, at best, limit its use when considering more complex and nuanced issues and, at worst, hinder the development of human understanding.

Ultimately ChatGPT’s response to any query or requested task is based on what it has been fed, either by OpenAI or by its users. It is not connected to the internet, but works from a massive corpus of texts in numerous languages. It does not, therefore, look beyond the conclusions that can be drawn from this data set: it cannot challenge the premise of a question, or the assumptions behind the answers its data set can provide. In fact, the technology behind ChatGPT, arguably, pours concrete on established understanding and hinders the dissent which is, most often, at the genesis of human progress. If OpenAI had existed in 1914 it would, no doubt, have been able to navigate Newtonian ideas with ease, but would it have played any meaningful role in the creation of Einstein’s theory of General Relativity?

The chatbot is built on top of OpenAI’s GPT-3.5 set of so-called Large Language Models and is fine-tuned with deep-learning techniques such as supervised and reinforcement learning, both of which use human “trainers” to improve the models’ performance[i]. OpenAI also continues to gather data from ChatGPT users, and this is now used to train the language models and build the database driving the platform’s responses. One feature of this ongoing training is that users can upvote and downvote ChatGPT’s output. This could, in theory, lead to an extreme form of group-think, which makes it difficult for dissenters (innovators) to be heard. Moreover, queries are filtered through OpenAI’s moderation API to prevent offence. If the definition of “offence” is too broad, this might make it difficult for the platform’s base of knowledge to develop in a healthy way: George Bernard Shaw’s “Unreasonable Man” might not get a look in.

To test ChatGPT on a more complex subject I picked the most challenging issue the investment industry is likely to face in 2023: the future of ESG. ESG has become a cornerstone of our industry since the acronym appeared in a UN report in 2004, but there is now a growing backlash against it, particularly in the US.

Warren Buffett, when faced with a proposal to make Berkshire Hathaway report extensively on its ESG credentials, described it as “asinine”, and Elon Musk went as far as to call ESG a “scam”. At a more practical level, a growing list of US state treasurers have blacklisted banks that use ESG metrics when determining funding costs, arguing that they penalise essential industries such as oil, gas, steel and transportation.

This backlash is rooted in the belief that the activities of investment firms, banks and other corporations should not be driven by political agendas set elsewhere, often outside of a democratic process. It is a concern that was raised as early as 1970 by Milton Friedman in his New York Times article “The Social Responsibility of Business is to Increase Its Profits”, in which he argued that a CEO who pursues a social agenda beyond their business remit is in effect imposing a tax on the company’s shareholders and employees. Friedman saw this as “taxation without representation”. His argument has been picked up by biotech entrepreneur Vivek Ramaswamy, whose 2020 Wall Street Journal article, “‘Stakeholders’ vs. the People”, argued that it should be “Citizens—not the church, not corporate leaders, not large asset managers— [that] define the common good through the democratic process”.

So as ESG comes up against some fundamental challenges I wondered how OpenAI would “think” about these, and so I simply asked it, “What are the main flaws in ESG?”.

Once again, its response was articulate and coherent. It succinctly described what ESG is and went on to outline several “structural” flaws: the lack of consistency and standardization, the subjectivity when assessing ESG factors, the limited coverage, the lack of transparency in the way ESG data is reported, and so on.

What it didn’t do was explore the deeper, more fundamental, questions now being asked by politicians and industry practitioners alike. It answered the questions within the confines of the ESG framework and, given how the algorithm works, this makes sense. If most of the ESG information within OpenAI's corpus of text was created by the ESG industry, then the model is overwhelmingly likely to create an answer based on that information. Moreover, if users asking that question are supportive of ESG then they are more likely to upvote responses which are unquestioning of ESG’s fundamental legitimacy. In effect, “dissenting” challenges, as we now see emerging, are suppressed on the system.

Impact

Whatever the limitations of ChatGPT, this is the year AI goes mainstream. Moreover, the current release is a toddler in technology terms: GPT-4 is expected by the end of 2023, and the chatbot itself is rumoured not to be the best one in the OpenAI stable.

For now at least, some knowledge workers might rightly become anxious. Ask it to write a program in Python to monitor the price of S&P 500 constituents: no problem (it even explains how the program works). Ask it to draw up a simple Loan Note Agreement: easy (although it does recommend talking to a lawyer for more complex agreements).
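To give a sense of the scale of that programming task, here is a minimal sketch of what a price monitor might look like. It is not ChatGPT's output. The function name, the 2% threshold and the tickers and prices are all made up for illustration, and the prices are passed in as plain dictionaries rather than fetched from a real market data feed.

```python
from typing import Dict, List, Tuple


def find_large_moves(
    previous: Dict[str, float],
    latest: Dict[str, float],
    threshold_pct: float = 2.0,  # assumed alert threshold, purely illustrative
) -> List[Tuple[str, float]]:
    """Return (ticker, % change) for every price move of at least threshold_pct."""
    alerts = []
    for ticker, old_price in previous.items():
        new_price = latest.get(ticker)
        # Skip tickers with no new quote or an unusable previous price.
        if new_price is None or old_price <= 0:
            continue
        pct_change = (new_price - old_price) / old_price * 100.0
        if abs(pct_change) >= threshold_pct:
            alerts.append((ticker, round(pct_change, 2)))
    # Largest absolute moves first.
    return sorted(alerts, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical tickers and prices; a real monitor would pull these from a data provider.
previous_prices = {"AAA": 100.0, "BBB": 50.0, "CCC": 200.0}
latest_prices = {"AAA": 103.0, "BBB": 49.75, "CCC": 190.0}

alerts = find_large_moves(previous_prices, latest_prices)
for ticker, change in alerts:
    print(f"{ticker}: {change:+.2f}%")
```

In practice the two dictionaries would be refreshed on a schedule for the full list of index constituents. The comparison logic would stay much the same.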

The biggest threat, however, might be faced by Alphabet (OpenAI’s Chief Scientist, Ilya Sutskever, was formerly Google’s expert on machine learning). As ChatGPT doesn’t have access to the internet it cannot, for now at least, replace a search engine. For many straightforward queries, however, it is much more efficient than a search engine: whereas Google returns a list of links, from which the user needs to find the most useful, ChatGPT answers questions and responds to requests directly. Even if these straightforward queries only make up a small portion of overall queries, that could spell trouble for Google, whose revenue overwhelmingly comes from adverts on searches. Moreover, Microsoft is reportedly looking to integrate the technology behind ChatGPT into its own search engine Bing, Google’s smaller competitor.

The competitive threat aside, the newcomer might also create another problem for Google. If the Web is rapidly filled with AI-generated content, a portion of which is factually incorrect, then it will be more difficult for Google to fulfil its core mission of returning the most appropriate answers to each query. The blogging site, Substack, has already banned material produced by GPT and it is for this reason that Alphabet itself is cautious about launching its own AI-driven chatbot, LaMDA (Language Model for Dialogue Applications).

At the very least it will change the way many people think and work; in some cases it will replace them altogether. Likewise, it will in time impact the efficiency and competitive position of many corporations, not just in technology but everywhere.

Whatever this technology disrupts, however, the need for critical thinking will remain as complex issues appear, requiring more nuanced analysis. Moreover, for human progress to continue we will always need dissent. Dissent both liberates and stimulates our thinking, and it does so whether it’s right or wrong.

[i] In the supervised phase the models are given conversations in which the trainers play both the teacher and the AI assistant. In the reinforcement phase, the trainers rank the AI assistant’s responses with the model then being “rewarded” for providing high ranking answers in future conversations.
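As a rough illustration of the ranking step described in this note, the sketch below turns one trainer's ranking into "preferred versus rejected" pairs and counts how often each answer wins. This is a toy under stated assumptions, not OpenAI's implementation: the function names are hypothetical, and the simple win count stands in for the learned reward signal used in practice.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, List, Tuple


def ranking_to_preferences(ranked_responses: List[str]) -> List[Tuple[str, str]]:
    """Turn one ranking (best answer first) into (preferred, rejected) pairs."""
    # combinations() keeps the input order, so the first item of each pair
    # is always the answer the trainer ranked higher.
    return list(combinations(ranked_responses, 2))


def win_counts(pairs: List[Tuple[str, str]]) -> Dict[str, int]:
    """Count how often each answer was preferred: a crude stand-in for a reward."""
    wins: Counter = Counter()
    for preferred, rejected in pairs:
        wins[preferred] += 1
        wins[rejected] += 0  # make sure answers that never win still appear
    return dict(wins)


# Hypothetical ranking of three candidate answers to the same prompt.
ranking = ["answer A", "answer B", "answer C"]
pairs = ranking_to_preferences(ranking)
scores = win_counts(pairs)
print(pairs)
print(scores)
```

The higher an answer's score, the more the model would be "rewarded" for producing answers like it in future conversations.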
